Apache Hive on Docker

If you are a big data enthusiast interested in learning Apache Hive, this blog will help you set it up on your local machine in a few easy steps. This setup is a good starting point for beginners because it mimics the main components of a production cluster on a single machine.

This tutorial uses Docker containers to spin up Apache Hive. Before we jump right into it, here is a quick overview of some of the critical components in this cluster.

Apache Hive:

Apache Hive is a distributed, fault-tolerant data warehouse system that enables analytics of large datasets residing in distributed storage using SQL.

Docker:

Docker is an open-source technology to package an application and all its dependencies into a container.

NameNode:

The NameNode is at the core of HDFS, the Hadoop Distributed File System. It keeps the directory tree of all files in the file system and tracks where in the cluster the file data is actually kept.

DataNode:

The DataNode, on the other hand, stores the actual file data. Files are split into blocks, and each block is replicated across multiple DataNodes in a cluster for reliability.

In Hive terms, the data in Hive tables is stored as blocks spread across the DataNodes in the cluster. The NameNode keeps track of which DataNodes hold each of those blocks.

We are using a single DataNode in this tutorial for the sake of simplicity.
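You can inspect the NameNode's bookkeeping yourself once the cluster from the steps below is running. The NameNode can report which DataNodes are live and where each block of a file is stored. The sketch below wraps two standard HDFS admin commands, hdfs dfsadmin -report and hdfs fsck, in shell functions. It rests on a few assumptions:

- The function names are hypothetical.
- The hdfs CLI is on the PATH inside the namenode container.
- The DOCKER variable is an illustrative addition: setting DOCKER=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (names are illustrative). They assume the container
# is called "namenode", as in the docker-compose down output in step 7,
# and that the hdfs CLI is on the PATH inside it.

# Summary of HDFS capacity and the live DataNodes (one in this setup).
hdfs_report() {
  "${DOCKER:-docker}" exec namenode hdfs dfsadmin -report
}

# Files, blocks and the DataNode holding each block for an HDFS path.
# The default path is the employee table directory used in step 5.
hdfs_block_locations() {
  local path=${1:-/user/hive/warehouse/testdb.db/employee}
  "${DOCKER:-docker}" exec namenode hdfs fsck "$path" -files -blocks -locations
}
```

After step 5, hdfs_block_locations should list employee.csv with its block(s) stored on the single DataNode.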

Hive Metastore:

Hive uses a relational database to store the metadata (e.g. schema and location) of all its tables. The default database is Derby, but we will be using PostgreSQL in this tutorial.

Keeping this metadata in a relational database rather than in HDFS gives Hive low-latency lookups and better performance.

Volumes:

Volumes are the preferred mechanism for persisting data generated by and used by Docker containers.

Volumes also allow us to persist the container state between subsequent docker runs.

If you don’t create a volume explicitly, it is created the first time it is mounted into a container, and it still exists after that container stops or is removed. In this setup, volumes on the NameNode and DataNode containers persist the HDFS file system. The volume on the PostgreSQL container persists the metadata of previously created Hive tables.

Prerequisites:

The only prerequisite is Docker. I’m running it on a MacBook Pro with 8 GB of RAM. Installing Docker is straightforward. If you don’t already have it on your machine, follow the instructions at https://docs.docker.com/docker-for-mac/install/.

1. Directory Structure:

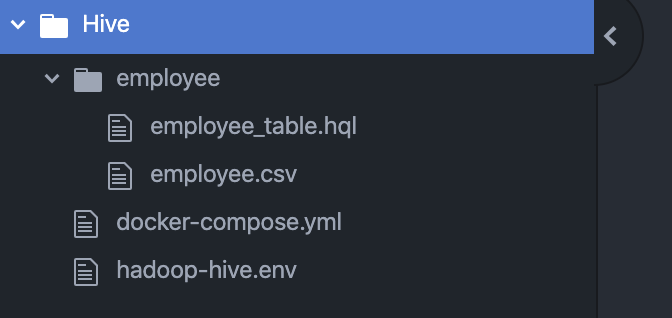

Create the directory structure shown in the image on your local machine.
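If you would rather script this step, the layout in the image can be created in one go. This is a minimal sketch using a hypothetical function, scaffold_hive_project, which takes an optional parent directory. It is safe to rerun: mkdir -p ignores existing directories, and touch leaves the contents of existing files alone.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create the Hive/ project layout from step 1 under
# a parent directory (default: the current directory). Safe to rerun:
# mkdir -p ignores existing directories and touch does not truncate files.
scaffold_hive_project() {
  local root=${1:-.}/Hive
  mkdir -p "$root/employee" || return 1
  touch "$root/docker-compose.yml" \
        "$root/hadoop-hive.env" \
        "$root/employee/employee_table.hql" \
        "$root/employee/employee.csv"
}
```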

Fire up a terminal and run the below commands to create the directories and empty files inside them.

$ mkdir Hive

$ cd Hive

$ touch docker-compose.yml

$ touch hadoop-hive.env

$ mkdir employee

$ cd employee

$ touch employee_table.hql

$ touch employee.csv

2. Edit files:

Open each file in your favorite editor and simply paste the below code snippets in them.

docker-compose.yml:

Compose is a Docker tool for defining and running multi-container Docker applications. We are using the below YAML file to configure the services required by our Hive cluster. The biggest advantage of using Compose is that it creates and starts all the services using a single command.
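Once the YAML is pasted in, you can let Compose parse it before starting anything. docker-compose config --services prints one service name per line and fails with an error if the file does not parse. The hypothetical check_services helper below reports any of the five services from step 7 that are missing from that list. It assumes the service names match the container names printed by docker-compose down in step 7; the file itself is the authority on that.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read service names (one per line) on stdin and
# report any expected service that is missing. The expected names are an
# assumption based on the container names shown in step 7.
check_services() {
  local listed svc status=0
  listed=$(cat)
  for svc in namenode datanode hive-metastore-postgresql hive-metastore hive-server; do
    # -x matches whole lines only, so hive-metastore does not match
    # hive-metastore-postgresql.
    if ! printf '%s\n' "$listed" | grep -qx -- "$svc"; then
      echo "missing service: $svc"
      status=1
    fi
  done
  return "$status"
}
```

Run it from the Hive directory as docker-compose config --services | check_services. No output and a zero exit status mean all five services were found.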

hadoop-hive.env:

This .env file sets the environment variables the containers work with.
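Compose reads an env file line by line:

- each line sets one variable as NAME=value;
- lines starting with # are comments;
- empty lines are ignored.

A small check before starting the cluster can catch a broken line early. The sketch below is a hypothetical lint that is stricter than Compose itself; for example, it rejects lines made only of spaces. It assumes docker-compose.yml loads hadoop-hive.env through env_file.

```shell
#!/usr/bin/env bash
# Hypothetical lint for an env file: every line must be empty, a "#"
# comment, or NAME=value with NAME made of letters, digits and
# underscores. Prints offending lines and returns 1 if any are found.
lint_env_file() {
  local file=$1 line lineno=0 status=0
  # The "|| [ -n ... ]" part also handles a last line without a newline.
  while IFS= read -r line || [ -n "$line" ]; do
    lineno=$((lineno + 1))
    case $line in
      '' | '#'*) continue ;;
    esac
    if ! printf '%s\n' "$line" | grep -Eq '^[A-Za-z_][A-Za-z0-9_]*='; then
      echo "$file:$lineno: expected NAME=value: $line"
      status=1
    fi
  done < "$file"
  return "$status"
}
```

Usage: lint_env_file hadoop-hive.env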

employee_table.hql:

This HQL script is used later in the tutorial to create a sample Hive database and table. Docker automatically mounts it into the hive-server container when that container starts.

employee.csv:

This CSV file contains sample records that will be loaded into the employee table. Like the HQL script, Docker automatically mounts it into the hive-server container at startup.
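To confirm that Docker actually mounted both files, you can check for them inside the running container once step 3 has started the cluster. The sketch below assumes the files appear under /employee, which is where step 5 finds them. The function name is illustrative. The DOCKER override is also an addition; it lets you fake the docker call, for example with DOCKER=true.

```shell
#!/usr/bin/env bash
# Hypothetical check: confirm both sample files are visible inside the
# hive-server container under /employee. DOCKER defaults to "docker".
check_employee_mount() {
  local f status=0
  for f in employee_table.hql employee.csv; do
    if "${DOCKER:-docker}" exec hive-server test -f "/employee/$f"; then
      echo "ok: /employee/$f"
    else
      echo "missing: /employee/$f"
      status=1
    fi
  done
  return "$status"
}
```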

3. Create & Start all services:

Navigate into the Hive directory on your local machine and run a single docker-compose command to create and start all the services required by the Hive cluster.

$ docker-compose up

4. Verify container status:

Allow Docker a few minutes to spin up all the containers. I use a couple of ways to confirm that the required services are up and running:

- Look for the below message in the logs once you run docker-compose up.

- Run docker stats in another terminal. While Docker spins up the containers, their CPU and memory usage fluctuates. With no other activity on the cluster, it stabilizes after a few minutes once the services are up.

$ docker stats
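Rather than watching the logs or docker stats, you can also poll until Hive answers a trivial query. The hypothetical wait_until helper below retries any command up to a fixed number of attempts. The 10-second pause between attempts (WAIT_INTERVAL) is an assumption; tune it for your machine.

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command until it succeeds, trying at most
# $1 times and sleeping WAIT_INTERVAL seconds (default 10) in between.
wait_until() {
  local attempts=$1 i=1
  shift
  until "$@"; do
    if [ "$i" -ge "$attempts" ]; then
      echo "gave up after $attempts attempts: $*" >&2
      return 1
    fi
    i=$((i + 1))
    sleep "${WAIT_INTERVAL:-10}"
  done
}
```

For example, wait_until 30 docker exec hive-server hive -e 'show databases;' keeps trying for roughly five minutes, plus the time each hive invocation takes. docker-compose up as used in step 3 stays in the foreground, so run the check from a second terminal, or start the cluster with docker-compose up -d instead.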

5. Demo:

It’s time for a quick demo! To create a sample database and Hive table, first log on to the hive-server container by running the command below in a new terminal.

$ docker exec -it hive-server /bin/bash

Navigate to the employee directory on the hive-server container.

root@dc86b2b9e566:/opt# ls

hadoop-2.7.4 hive

root@dc86b2b9e566:/opt# cd ..

root@dc86b2b9e566:/# ls

bin boot dev employee entrypoint.sh etc hadoop-data home lib lib64 media mnt opt proc root run sbin srv sys tmp usr var

root@dc86b2b9e566:/# cd employee/

Run employee_table.hql to create a new external Hive table, employee, in a new database, testdb.

root@dc86b2b9e566:/employee# hive -f employee_table.hql

Now, let’s add some data to this Hive table. Simply copy employee.csv from the employee directory on the hive-server container into the table’s location in HDFS.

root@dc86b2b9e566:/employee# hadoop fs -put employee.csv hdfs://namenode:8020/user/hive/warehouse/testdb.db/employee

6. Validate the setup:

On the hive-server, launch hive to verify the contents of the employee table.

root@df1ac619536c:/employee# hive

hive> show databases;

OK

default

testdb

Time taken: 2.363 seconds, Fetched: 2 row(s)

hive> use testdb;

OK

Time taken: 0.085 seconds

hive> select * from employee;

OK

1 Rudolf Bardin 30 cashier 100 New York 40000 5

2 Rob Trask 22 driver 100 New York 50000 4

3 Madie Nakamura 20 janitor 100 New York 30000 4

4 Alesha Huntley 40 cashier 101 Los Angeles 40000 10

5 Iva Moose 50 cashier 102 Phoenix 50000 20

Time taken: 4.237 seconds, Fetched: 5 row(s)
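Steps 5 and 6 can also be run from the host without an interactive shell, by passing each command to docker exec. The sketch below reuses the container name, paths and HDFS URL from the steps above. The function names are hypothetical, and the script uses absolute paths under /employee instead of changing into that directory. As before, setting DOCKER=echo prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Hypothetical non-interactive version of steps 5 and 6, run on the host.
# DOCKER can be overridden (e.g. DOCKER=echo) to print the commands.

load_employee_table() {
  local docker=${DOCKER:-docker}
  # Step 5a: create testdb and the external employee table.
  "$docker" exec hive-server hive -f /employee/employee_table.hql || return 1
  # Step 5b: copy the sample rows into the table's HDFS directory.
  # hadoop fs -put fails if the file already exists there; add -f to overwrite.
  "$docker" exec hive-server hadoop fs -put /employee/employee.csv \
    hdfs://namenode:8020/user/hive/warehouse/testdb.db/employee
}

# Step 6: print the number of rows in the table (-S silences Hive's
# informational messages, so only the count should be left on stdout).
employee_row_count() {
  "${DOCKER:-docker}" exec hive-server hive -S -e 'select count(*) from testdb.employee;'
}
```

With the sample data loaded, employee_row_count should print 5, matching the five rows above. Running it again after the restart in step 7 is a quick persistence check.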

7. Validate the container state between subsequent docker runs:

All good so far. You have successfully created an Apache Hive cluster on Docker.

It is critical to verify that the Hive tables survive between Docker runs, so that we do not lose our progress when the containers are stopped. So restart the containers and check whether the Hive data persisted.

In a new terminal, run the command below from the Hive directory to stop and remove all the containers.

$ docker-compose down

Stopping hive-server ... done

Stopping hive-metastore ... done

Stopping hive-metastore-postgresql ... done

Stopping datanode ... done

Stopping namenode ... done

Removing hive-server ... done

Removing hive-metastore ... done

Removing hive-metastore-postgresql ... done

Removing datanode ... done

Removing namenode ... done

Removing network hive_default
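docker-compose down removes the containers and the default network, but it keeps volumes unless you pass -v (--volumes). That is what lets the data survive. Before bringing the cluster back, you can list the remaining volumes. The sketch below makes a few assumptions:

- docker-compose.yml declares named volumes.
- Compose prefixes those volumes with the project name hive, the same prefix as the hive_default network above.
- The function name is hypothetical.

The name filter matches substrings, so unrelated volumes containing hive_ would be listed too.

```shell
#!/usr/bin/env bash
# Hypothetical check: list volume names containing "hive_" (the assumed
# Compose project prefix) and fail if there are none.
list_hive_volumes() {
  local names
  names=$("${DOCKER:-docker}" volume ls --quiet --filter name=hive_) || return 1
  if [ -z "$names" ]; then
    echo "no volumes matching hive_ found" >&2
    return 1
  fi
  printf '%s\n' "$names"
}
```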

Once all containers are stopped, run docker-compose up one more time.

$ docker-compose up

Wait a few minutes for the containers to come back online, as in step 4. Then log on to the hive-server container and run the select query.

$ docker exec -it hive-server /bin/bash

root@df1ac619536c:/opt# hive

hive> select * from testdb.employee;

OK

1 Rudolf Bardin 30 cashier 100 New York 40000 5

2 Rob Trask 22 driver 100 New York 50000 4

3 Madie Nakamura 20 janitor 100 New York 30000 4

4 Alesha Huntley 40 cashier 101 Los Angeles 40000 10

5 Iva Moose 50 cashier 102 Phoenix 50000 20

Time taken: 4.237 seconds, Fetched: 5 row(s)

Hurray! We still have our data!

Congratulations on setting up your Hive server on Docker! Keep practicing!